Sitemap Optimization Guide for Enhanced AI Discovery

A well-structured sitemap helps unlock your website’s full potential with AI search engines. With over 90 percent of search traffic going to sites that are easily crawled and indexed, even small issues with your sitemap can hold back your online visibility. Reviewing how search engines and AI crawlers access your site is more important than ever. This step-by-step guide will show you the latest strategies to improve your sitemap, boost crawlability, and help your content reach a wider audience.

Table of Contents

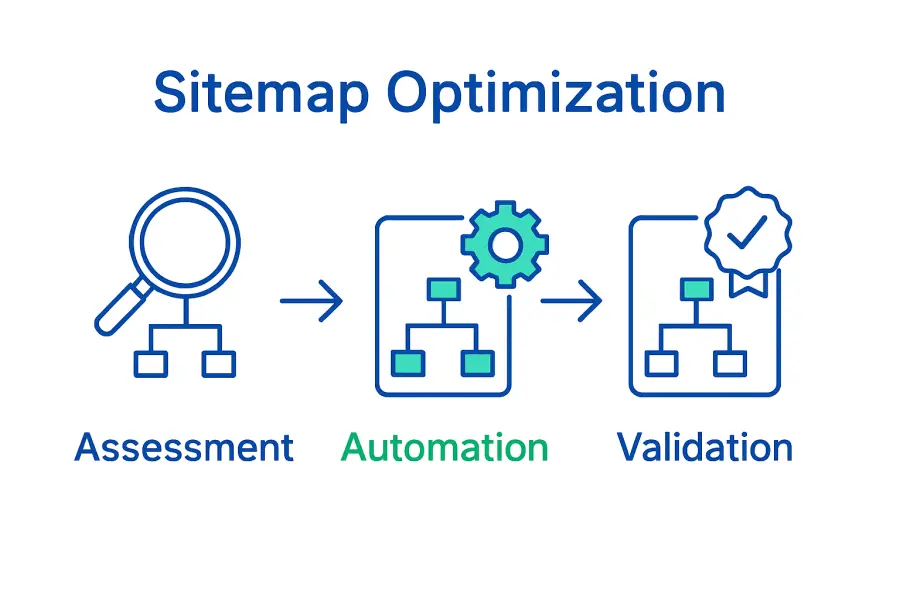

- Step 1: Assess Current Sitemap and Crawlability

- Step 2: Integrate Automated Sitemap Solutions

- Step 3: Refine Sitemap Structure for AI Relevance

- Step 4: Enable Ongoing Sitemap and Content Updates

- Step 5: Validate AI and Search Engine Discoverability

Quick Summary

| Key Point | Explanation |
|---|---|
| 1. Assess your current sitemap’s crawlability | Evaluate your sitemap to ensure it contains all important pages and allows easy access for search engine crawlers. |
| 2. Implement automated sitemap solutions | Use tools and plugins to automatically generate and update your sitemap, ensuring it reflects the latest website changes. |
| 3. Refine sitemap structure for clarity | Organize your sitemap into logical clusters that help AI systems understand the relationships between different pages. |
| 4. Establish ongoing sitemap updates | Regularly audit and update your sitemap, utilizing automation to keep it in sync with your content changes. |
| 5. Validate discoverability and indexing | Use tools to ensure your website is discoverable by search engines and make necessary adjustments based on reports. |

Step 1: Assess Current Sitemap and Crawlability

In this critical first step, you will evaluate your website’s current sitemap and its ability to be efficiently crawled by AI search engines and web crawlers. Understanding your sitemap’s structure and performance is key to improving your site’s AI discoverability.

According to research from airccse, efficient web crawling depends on dynamically prioritizing web pages within a sitemap. This means analyzing which pages are most significant and ensuring crawlers can access them quickly. Start by locating your current XML sitemap, typically found at yourdomain.com/sitemap.xml. Review its completeness and verify that it includes all important pages while excluding unnecessary ones, such as duplicate content or deprecated pages.
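The completeness review described above can be partly scripted. The following is a minimal sketch, assuming you already have the sitemap XML as text and your own list of pages you consider important. The function names, the sample sitemap, and the example.com URLs are hypothetical and only illustrate the idea.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text):
    """Return every <loc> value in a urlset, in document order."""
    root = ET.fromstring(xml_text)
    locs = root.findall("sm:url/sm:loc", SITEMAP_NS)
    return [loc.text.strip() for loc in locs if loc.text]


def review_sitemap(xml_text, important_pages):
    """Report important pages missing from the sitemap and URLs listed twice."""
    seen, duplicates = set(), []
    for url in sitemap_urls(xml_text):
        if url in seen and url not in duplicates:
            duplicates.append(url)
        seen.add(url)
    missing = [page for page in important_pages if page not in seen]
    return {"missing": missing, "duplicates": duplicates}


# Hypothetical sample data for illustration only.
SAMPLE = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/pricing</loc></url>
  <url><loc>https://example.com/pricing</loc></url>
</urlset>"""

report = review_sitemap(
    SAMPLE, ["https://example.com/", "https://example.com/contact"]
)
print(report)
```

In this sample the report flags the contact page as missing and the pricing page as listed twice. Deciding which pages count as important remains a manual judgment.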

To assess crawlability, use tools like Google Search Console or specialized crawl analysis platforms. Check for potential barriers such as robots.txt restrictions, slow page load speeds, or complex navigation structures that might impede AI crawler access. As mecs-press research highlights, a well-structured sitemap plays a crucial role in facilitating content discovery. Pay special attention to your site architecture, ensuring logical page hierarchies and clean internal linking.
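One of the barriers mentioned above, robots.txt restrictions, can be checked with Python's standard library. This is a rough sketch that assumes you have the robots.txt contents as a string. The rules shown and the crawler name ExampleAIBot are made up for illustration and do not refer to a real crawler.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="*"):
    """Return the URLs that the robots.txt rules disallow for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical rules: everyone is kept out of /drafts/, and one
# made-up crawler is blocked from the whole site.
ROBOTS = """User-agent: *
Disallow: /drafts/

User-agent: ExampleAIBot
Disallow: /
"""

urls = ["https://example.com/", "https://example.com/drafts/post-1"]
blocked_for_all = blocked_urls(ROBOTS, urls)
blocked_for_bot = blocked_urls(ROBOTS, urls, "ExampleAIBot")
print(blocked_for_all)
print(blocked_for_bot)
```

Running the same check for each crawler user agent you care about shows whether a page listed in the sitemap is unintentionally blocked for that crawler.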

Quick warning: Incomplete or poorly structured sitemaps can dramatically reduce your website’s visibility to AI search tools. Regularly audit and update your sitemap to maintain optimal discoverability.

Next, you will learn how to automate sitemap generation and maintenance so your sitemap stays complete without manual effort.

Step 2: Integrate Automated Sitemap Solutions

In this step, you will learn how to implement automated solutions that streamline your sitemap generation and maintenance, ensuring optimal AI discoverability without manual intervention. Automated sitemap solutions can dramatically simplify your website optimization process.

According to ubcms, configuring automated XML sitemaps is crucial for improving search engine indexing. Most content management systems and website platforms offer built-in modules or plugins that can automatically generate and update your sitemap. For WordPress sites, plugins like Yoast SEO or Google XML Sitemaps can handle this process seamlessly. For custom websites, consider implementing server-side scripts or using cloud services that dynamically generate and update your sitemap as your website content changes.
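For a custom website, a server-side script along these lines could produce the sitemap. This is a minimal sketch, not a drop-in replacement for a CMS plugin. It assumes your application can supply a list of page URLs with their last modification dates; the build_sitemap function and the sample pages are hypothetical.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_XMLNS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML text from (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_XMLNS)
    for url, last_modified in pages:
        entry = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as "&" in the URL text.
        ET.SubElement(entry, "loc").text = url
        ET.SubElement(entry, "lastmod").text = last_modified.isoformat()
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical page list; in practice this would come from your CMS
# or database.
pages = [
    ("https://example.com/", date(2024, 5, 1)),
    ("https://example.com/blog/sitemap-tips?ref=a&b=1", date(2024, 5, 3)),
]
xml_text = build_sitemap(pages)
print(xml_text)
```

Building the document with an XML library rather than string concatenation means special characters in URLs are escaped for you.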

Research from websites highlights the importance of configuring XML sitemap modules that automatically generate sitemaps conforming to standard specifications. When selecting an automated solution, prioritize tools that offer real-time updates, support multiple content types, and provide detailed reporting on sitemap performance. This ensures your sitemap remains current and reflects your most recent website structure.

Warning: Not all automated solutions are created equal. Always verify that your chosen tool generates clean, compliant XML sitemaps and does not introduce unnecessary complexity to your website architecture.
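To verify that a tool's output is clean and compliant, you can run a few basic checks against the sitemaps.org protocol, which limits a single sitemap file to 50,000 URLs and 50 MB uncompressed. The sketch below is an assumption-laden starting point rather than a full validator: the function name and sample files are hypothetical, and the date pattern is deliberately stricter than the protocol, which also permits year-only and year-month values.

```python
import re
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"
MAX_URLS = 50_000
MAX_BYTES = 50 * 1024 * 1024
# Simplified W3C Datetime check: a full date, optionally with time and zone.
LASTMOD_RE = re.compile(
    r"^\d{4}-\d{2}-\d{2}(T\d{2}:\d{2}(:\d{2}(\.\d+)?)?(Z|[+-]\d{2}:\d{2}))?$"
)


def compliance_problems(xml_bytes):
    """Return a list of human-readable problems; empty means none found."""
    problems = []
    if len(xml_bytes) > MAX_BYTES:
        problems.append("file exceeds 50 MB uncompressed")
    try:
        root = ET.fromstring(xml_bytes)
    except ET.ParseError as error:
        return problems + [f"not well-formed XML: {error}"]
    if root.tag != NS + "urlset":
        return problems + [f"unexpected root element: {root.tag}"]
    entries = root.findall(NS + "url")
    if len(entries) > MAX_URLS:
        problems.append(f"{len(entries)} URLs exceeds the 50,000 limit")
    for entry in entries:
        loc = (entry.findtext(NS + "loc") or "").strip()
        parsed = urlparse(loc)
        if parsed.scheme not in ("http", "https") or not parsed.netloc:
            problems.append(f"loc is not an absolute URL: {loc!r}")
        lastmod = entry.findtext(NS + "lastmod")
        if lastmod is not None and not LASTMOD_RE.match(lastmod.strip()):
            problems.append(f"lastmod is not a W3C date for {loc!r}: {lastmod!r}")
    return problems


# Hypothetical sample files for illustration only.
GOOD = (
    b'<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    b"<url><loc>https://example.com/</loc><lastmod>2024-05-01</lastmod></url>"
    b"</urlset>"
)
BAD = (
    b'<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    b"<url><loc>/pricing</loc><lastmod>May 1, 2024</lastmod></url>"
    b"</urlset>"
)
print(compliance_problems(GOOD))
print(compliance_problems(BAD))
```

The second sample fails twice: its location is a relative path and its date is not in the expected format.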

In the next section, you will learn how to refine your sitemap structure so AI crawlers can interpret your content hierarchy.

Step 3: Refine Sitemap Structure for AI Relevance

In this crucial step, you will learn how to strategically restructure your sitemap to maximize AI discoverability and create a more intelligent content hierarchy that enhances search engine understanding. The goal is to transform your sitemap from a simple list of pages into a strategic navigation blueprint for AI crawlers.

According to uit, utilizing sitemaps effectively involves carefully organizing and structuring information to improve accessibility for both users and AI systems. Start by categorizing your content into logical clusters. Group related pages together hierarchically, prioritizing content based on its relevance and depth. For instance, create main category pages that link to specific subcategory and article pages, ensuring a clear and intuitive content flow. This approach helps AI systems understand the relationships between different sections of your website and interpret your content more accurately.
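One simple way to start clustering is to group URLs by their first path segment. The sketch below assumes your URL paths already mirror your content categories, which may not hold for every site. The cluster_by_section function and the sample URLs are hypothetical.

```python
from collections import defaultdict
from urllib.parse import urlparse


def cluster_by_section(urls):
    """Group URLs by first path segment; the home page goes under 'root'."""
    clusters = defaultdict(list)
    for url in urls:
        segments = [part for part in urlparse(url).path.split("/") if part]
        section = segments[0] if segments else "root"
        clusters[section].append(url)
    return dict(clusters)


# Hypothetical URLs for illustration only.
urls = [
    "https://example.com/",
    "https://example.com/blog",
    "https://example.com/blog/sitemap-basics",
    "https://example.com/products/widgets/blue",
]
clusters = cluster_by_section(urls)
for section, members in clusters.items():
    print(section, len(members))
```

On larger sites, each cluster could become its own sitemap file listed in a sitemap index, which the sitemaps.org protocol supports.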

To refine your sitemap structure, implement a semantic approach that emphasizes content relationships and context. Include metadata such as last modified dates, content priority levels, and update frequencies, which the sitemap protocol expresses as the lastmod, priority, and changefreq tags. Accurate last modified dates help crawlers decide which pages to revisit. Google's documentation states that it ignores priority and changefreq values, so treat those two fields as optional hints for other crawlers. Learn more about making websites AI discoverable through strategic structural enhancements that go beyond traditional sitemap configurations.

Warning: Avoid creating overly complex or deep hierarchies that might confuse AI crawlers. Aim for a clear, logical structure with no more than three to four levels of depth.
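The depth guideline above can be checked automatically if you treat URL path depth as a rough proxy for hierarchy depth. That proxy is an assumption, since click depth from the home page can differ from path depth. The function names and sample URLs below are hypothetical.

```python
from urllib.parse import urlparse


def path_depth(url):
    """Count non-empty path segments: '/' is 0 and '/blog/post' is 2."""
    return len([part for part in urlparse(url).path.split("/") if part])


def too_deep(urls, max_depth=4):
    """Return the URLs whose path is deeper than max_depth levels."""
    return [url for url in urls if path_depth(url) > max_depth]


# Hypothetical URLs for illustration only.
urls = [
    "https://example.com/",
    "https://example.com/blog/sitemap-basics",
    "https://example.com/docs/guides/seo/sitemaps/advanced/tips",
]
deep_pages = too_deep(urls)
print(deep_pages)
```

Pages that the check flags are candidates for a flatter URL structure or for stronger internal links from higher-level pages.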

In the next section, you will set up ongoing updates so your refined sitemap stays in sync with your content.

Step 4: Enable Ongoing Sitemap and Content Updates

In this critical step, you will establish a systematic approach to keeping your website sitemap dynamic and responsive to content changes. The goal is to create an adaptive system that ensures your sitemap remains an accurate representation of your website’s current structure and content landscape.

According to marcom, maintaining an up-to-date sitemap is essential during website content management. Implement a regular review process where you audit your sitemap monthly or quarterly. This involves checking for new pages, removing deprecated content, and updating metadata such as last modification dates and content priority. Automate this process whenever possible by utilizing content management system plugins or specialized sitemap generation tools that can track and reflect website changes in real time.
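The audit described above amounts to comparing what the sitemap lists against what the site currently publishes. Here is a minimal sketch, assuming both sides are available as dictionaries that map each URL to a last modification date. The audit_sitemap function and the sample data are hypothetical.

```python
from datetime import date


def audit_sitemap(sitemap_entries, live_pages):
    """Compare sitemap entries with live pages (both map url -> date)."""
    listed, live = set(sitemap_entries), set(live_pages)
    # A listed date older than the page's real date means lastmod is stale.
    stale = [
        url for url in listed & live
        if sitemap_entries[url] < live_pages[url]
    ]
    return {
        "add": sorted(live - listed),
        "remove": sorted(listed - live),
        "stale": sorted(stale),
    }


# Hypothetical data for illustration only.
sitemap_entries = {
    "https://example.com/": date(2024, 1, 10),
    "https://example.com/old-offer": date(2023, 6, 1),
    "https://example.com/blog/a": date(2024, 2, 1),
}
live_pages = {
    "https://example.com/": date(2024, 1, 10),
    "https://example.com/blog/a": date(2024, 3, 15),
    "https://example.com/blog/b": date(2024, 3, 20),
}
audit = audit_sitemap(sitemap_entries, live_pages)
print(audit)
```

The three lists correspond to the three audit tasks: new pages to add, deprecated pages to remove, and entries whose last modification date needs updating.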

Research from edX emphasizes the importance of keeping sitemaps updated to ensure search engines can effectively discover and index your pages. Explore our comprehensive guide on website content updates to develop a robust strategy for maintaining your sitemap. Consider setting up automated scripts or using cloud services that can dynamically regenerate your XML sitemap whenever significant content changes occur. This approach ensures that AI search engines always have access to the most current version of your website structure.
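A script that regenerates the sitemap only when content actually changes could work roughly as follows. This sketch assumes you can list every page URL with its last modified value as a string, and that you store the previous fingerprint somewhere between runs. The function names are hypothetical, and the regenerate callback stands in for whatever rebuilds your sitemap.

```python
import hashlib
import json


def content_fingerprint(pages):
    """Hash the url -> last-modified mapping in a stable, sorted order."""
    canonical = json.dumps(sorted(pages.items()), separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def regenerate_if_changed(pages, previous_fingerprint, regenerate):
    """Call regenerate(pages) only when the content fingerprint differs."""
    current = content_fingerprint(pages)
    if current != previous_fingerprint:
        regenerate(pages)
    return current


# Hypothetical run: the list records each time a rebuild is triggered.
rebuilds = []
pages = {"https://example.com/": "2024-05-01"}

first = regenerate_if_changed(pages, None, rebuilds.append)
second = regenerate_if_changed(pages, first, rebuilds.append)

updated = dict(pages)
updated["https://example.com/blog/new"] = "2024-05-04"
third = regenerate_if_changed(updated, second, rebuilds.append)

print(len(rebuilds))
```

Here the sitemap is rebuilt on the first run and again after the new page appears, but not on the unchanged second run.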

Warning: Avoid manual sitemap updates that can become inconsistent or outdated. Prioritize automated solutions that provide real-time synchronization.

In the next section, you will learn advanced techniques for validating and testing your updated sitemap to maximize AI discovery potential.

Step 5: Validate AI and Search Engine Discoverability

In this final step, you will implement comprehensive validation techniques to confirm that your website is fully discoverable and optimally indexed by AI search engines. Your goal is to verify that all optimization efforts translate into actual visibility and accessibility for intelligent search systems.

According to ieeexplore, validating web crawling efficiency requires a systematic approach to evaluating content discoverability. Begin by using tools like Google Search Console, Bing Webmaster Tools, and specialized AI indexing platforms to run comprehensive crawl reports. These tools will help you understand how search engines and AI systems currently perceive and interact with your website. Check for potential indexing issues such as blocked resources, slow page load times, or metadata inconsistencies that might hinder AI discovery.

Research from ischool emphasizes the critical role of metadata in improving web content discoverability. Explore our comprehensive guide on how AI finds websites to gain deeper insights into optimization strategies. Conduct manual and automated tests to verify that your structured data, schema markup, and XML sitemap are correctly interpreted. Pay special attention to how your content appears in AI assistant search results and make incremental adjustments to improve semantic clarity and relevance.
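As one automated test for structured data, you can extract the JSON-LD blocks from a page and confirm that each one parses. The sketch below only checks that the JSON is well formed. It does not validate against schema.org vocabulary, and the class name, function name, and sample page are hypothetical.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collect the raw text of every application/ld+json script block."""

    def __init__(self):
        super().__init__()
        self._in_json_ld = False
        self._buffer = []
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_json_ld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_json_ld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_json_ld:
            self.blocks.append("".join(self._buffer))
            self._in_json_ld = False


def check_json_ld(html):
    """Return (parsed_items, errors) for the JSON-LD blocks in a page."""
    extractor = JsonLdExtractor()
    extractor.feed(html)
    extractor.close()
    items, errors = [], []
    for raw in extractor.blocks:
        try:
            items.append(json.loads(raw))
        except json.JSONDecodeError as error:
            errors.append(str(error))
    return items, errors


# Hypothetical page: the second block has a trailing comma and is invalid.
PAGE = """<html><head>
<script type="application/ld+json">
{"@context": "https://schema.org", "@type": "Article", "headline": "Sitemap basics"}
</script>
<script type="application/ld+json">
{"@type": "Article",}
</script>
</head><body></body></html>"""

items, errors = check_json_ld(PAGE)
print(len(items), len(errors))
```

A block that fails to parse is unlikely to be usable by any consumer, so this check is a reasonable first filter before running a dedicated structured data testing tool.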

Warning: Do not rely solely on automated tools. Periodically perform manual checks by searching your website content through various AI assistants to understand real-world discovery performance.

Congratulations. You have now completed a comprehensive process to optimize your website for enhanced AI and search engine discoverability.

Unlock Seamless AI Discovery with Automated Sitemap Optimization

Struggling to keep your sitemap up to date and structured for AI search engines? The “Sitemap Optimization Guide for Enhanced AI Discovery” highlights the challenges of maintaining clean, dynamic, and AI-friendly sitemaps that are crucial for visibility. You know how time-consuming manual updates can be and how confusing complex sitemap structures might block AI crawlers from finding your best content. Achieving continuous, real-time sitemap updates and intelligently organizing your site content is essential to stay ahead in AI-driven search results.

Experience how aeoptimer.com solves these exact problems by automating sitemap detection, structured data enhancement, and ongoing content updates without changing your website’s look. Our platform helps you boost AI discoverability effortlessly while aligning with current search engine trends. Don’t let outdated sitemaps hold you back. Start transforming your website’s AI visibility today by visiting aeoptimer.com and unlocking tools designed to keep your sitemap smart, clean, and optimized for AI. Your next step toward higher AI recognition and improved rankings is just a click away.

Frequently Asked Questions

How do I assess the current sitemap of my website?

Start by locating your XML sitemap at yourdomain.com/sitemap.xml and review its completeness. Ensure it includes all significant pages while filtering out duplicates or outdated content to enhance AI discoverability.

What automated solutions can I use for sitemap generation?

Implement plugins or modules from your content management system that can automatically generate and update your sitemap. This can save time and ensure your sitemap reflects real-time changes in content, enhancing AI indexing accuracy.

How can I refine my sitemap structure for better AI relevance?

Categorize your content into logical clusters and prioritize pages based on their relevance. Limit the hierarchy to three or four levels to maintain clarity for AI crawlers, ensuring they can easily navigate your site.

What ongoing updates should I make to my sitemap?

Audit your sitemap monthly or quarterly to add new pages, remove deprecated content, and update metadata. Automate the process where possible so the sitemap stays in sync with content changes.

How can I validate if my website is discoverable by AI?

Use tools to run comprehensive crawl reports and check for indexing issues, such as blocked resources or slow page speeds. Perform both manual and automated checks to verify that your sitemap and structured data are correctly understood by AI systems.

What metadata should be included in my sitemap?

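Include last modified dates and, optionally, content priority levels and update frequencies. Accurate last modified dates matter most, since Google's documentation states that it ignores priority and change frequency values. Keeping this metadata accurate helps crawlers decide which pages to revisit, improving overall discoverability.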