Complete Guide to How AI Assistants Find Websites

Over 80 percent of online journeys now involve interaction with AI assistants, making website discoverability more competitive than ever. As digital content grows at lightning speed, understanding how AI systems identify and rank websites can make the difference between visibility and obscurity. This article breaks down the processes and cutting-edge methods behind AI-driven web discovery, offering clear insights for anyone looking to ensure their site stands out to intelligent digital agents.

Table of Contents

- How AI Assistants Identify Websites Online

- Types of AI Discovery Methods Explained

- Role of Structured Data and Sitemaps

- Optimizing Websites for AI-Focused Visibility

- Common Pitfalls and How to Avoid Them

Key Takeaways

| Point | Details |
|---|---|
| Website Discovery Process | AI assistants utilize web crawling, semantic analysis, and ranking algorithms to identify and evaluate online content. |
| Importance of Structured Data | Structured data and sitemaps significantly enhance AI discoverability, providing clear, organized information for better comprehension. |
| Optimization for AI Visibility | Websites must adopt AI-focused optimization strategies such as semantic structuring and comprehensive metadata to improve visibility. |
| Common Discoverability Pitfalls | Websites should avoid inconsistent structures, over-optimization, and unclear semantics to enhance their chances of being recognized by AI systems. |

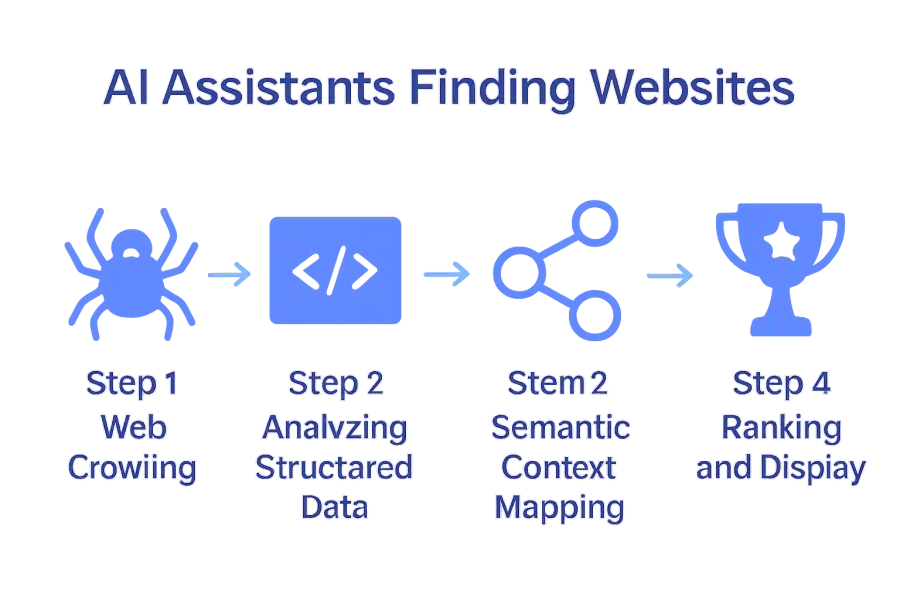

How AI Assistants Identify Websites Online

AI assistants have sophisticated mechanisms for discovering and evaluating websites across the digital landscape. Web discovery involves complex algorithms that systematically crawl, analyze, and index online content. According to research from arXiv, the Discovery Engine framework enables AI to synthesize knowledge by transforming disconnected information sources into a unified, computationally tractable representation.

The process of website identification typically involves several key stages. First, AI assistants use advanced web crawling technologies that systematically scan the internet, following hyperlinks and mapping digital connections. These crawlers examine multiple dimensions of a website, including:

- Structural metadata

- Content relevance

- Link networks

- Update frequency

- Technical performance indicators
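The link-following stage described above can be sketched in a few lines. This is a minimal illustration, not how any particular AI assistant crawls: the `crawl` and `extract_links` helpers, the `fetch` callback, and the in-memory `PAGES` dictionary are all hypothetical names introduced here so the example runs without network access.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def extract_links(base_url, html):
    """Return absolute URLs for all links found in `html`."""
    parser = LinkExtractor()
    parser.feed(html)
    return [urljoin(base_url, href) for href in parser.links]


def crawl(start_url, fetch, max_pages=10):
    """Breadth-first crawl limited to the start URL's host.

    `fetch` is a caller-supplied function: url -> HTML string, or None.
    Returns a mapping of each visited page to the links found on it.
    """
    host = urlparse(start_url).netloc
    seen = {start_url}
    queue = deque([start_url])
    link_graph = {}
    while queue and len(link_graph) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue
        links = extract_links(url, html)
        link_graph[url] = links
        for link in links:
            if link not in seen and urlparse(link).netloc == host:
                seen.add(link)
                queue.append(link)
    return link_graph


# Hypothetical in-memory "website" standing in for real HTTP requests.
PAGES = {
    "https://example.com/": '<a href="/about">About</a> <a href="/blog">Blog</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/blog": '<a href="/about">About</a> '
                                '<a href="https://other.example/x">External</a>',
}

graph = crawl("https://example.com/", PAGES.get)
for page, links in graph.items():
    print(page, "->", links)
```

The resulting `graph` is the kind of link map that later analysis stages can work from. A production crawler would also need politeness controls, error handling, and deduplication of equivalent URLs, all of which are omitted here.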

As arXiv research suggests, modern agentic AI systems are evolving to autonomously perform complex information retrieval tasks. This means AI assistants can now go beyond simple link following and actually comprehend contextual relationships between different web resources. They analyze not just what a website says, but how it connects to broader knowledge networks.

The final stage involves ranking algorithms that estimate a website’s credibility, authority, and relevance. These systems can weigh many signals, including domain age, content quality, user engagement metrics, and cross-references with other trusted information sources.
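To make the idea of combining signals concrete, here is a toy weighted score. Everything in it is an assumption for illustration: the `SiteSignals` fields, the `WEIGHTS` values, and the normalization caps are invented, and real ranking systems do not publish their signals or weights.

```python
from dataclasses import dataclass


@dataclass
class SiteSignals:
    domain_age_years: float   # how long the domain has existed
    content_quality: float    # assumed to be pre-scored in [0, 1]
    engagement: float         # assumed to be pre-scored in [0, 1]
    trusted_references: int   # count of mentions by trusted sources


# Hypothetical weights chosen only so that they sum to 1.0.
WEIGHTS = {
    "age": 0.1,
    "quality": 0.5,
    "engagement": 0.2,
    "references": 0.2,
}


def score(signals: SiteSignals) -> float:
    """Combine normalized signals into a single value in [0, 1]."""
    age = min(signals.domain_age_years / 10.0, 1.0)       # cap at 10 years
    refs = min(signals.trusted_references / 20.0, 1.0)    # cap at 20 references
    return (
        WEIGHTS["age"] * age
        + WEIGHTS["quality"] * signals.content_quality
        + WEIGHTS["engagement"] * signals.engagement
        + WEIGHTS["references"] * refs
    )


sites = {
    "established-blog": SiteSignals(12, 0.9, 0.6, 15),
    "new-thin-site": SiteSignals(0.5, 0.3, 0.2, 1),
}

ranked = sorted(sites, key=lambda name: score(sites[name]), reverse=True)
print(ranked)
```

A linear weighted sum is the simplest possible combination. It is used here only because it makes the trade-off between signals easy to see.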

By combining computational power with intelligent pattern recognition, AI assistants create increasingly nuanced methods of website discovery and evaluation.

For website owners seeking to improve their AI discoverability, understanding these identification processes is crucial. Making Websites AI Discoverable: Step-by-Step Guide offers comprehensive insights into optimizing online presence for AI recognition.

Types of AI Discovery Methods Explained

AI discovery methods are sophisticated technological approaches that enable intelligent systems to find, analyze, and categorize online information. According to ICAEW, these methods are primarily categorized into two fundamental learning paradigms: supervised learning and unsupervised learning. Supervised learning involves training models with labeled data to predict specific outcomes, while unsupervised learning allows models to independently identify patterns within unlabeled datasets.

The primary AI discovery methods can be further broken down into several key techniques:

- Crawling and Indexing: Systematic web scanning

- Link Analysis: Evaluating connections between websites

- Content Recognition: Understanding textual and semantic contexts

- Semantic Network Mapping: Identifying relationships between information sources

- Machine Learning Classification: Categorizing websites based on learned characteristics
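Link analysis from the list above can be illustrated with PageRank, a well-known algorithm for scoring pages by their incoming links. The article does not say which link analysis method any AI assistant uses, so treat this as one representative technique. The site graph and the `pagerank` helper below are hypothetical.

```python
def pagerank(graph, damping=0.85, iterations=50):
    """Power-iteration PageRank over a dict of page -> list of outlinks."""
    nodes = list(graph)
    n = len(nodes)
    rank = {node: 1.0 / n for node in nodes}
    for _ in range(iterations):
        # Every page starts each round with the "random jump" share.
        new_rank = {node: (1.0 - damping) / n for node in nodes}
        for node, outlinks in graph.items():
            targets = [t for t in outlinks if t in graph]
            if targets:
                share = damping * rank[node] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outlinks spreads its rank over all pages.
                share = damping * rank[node] / n
                for target in nodes:
                    new_rank[target] += share
        rank = new_rank
    return rank


# Hypothetical four-page site: nothing links to "orphan".
site_graph = {
    "home": ["about", "blog"],
    "about": ["home"],
    "blog": ["home", "about"],
    "orphan": ["home"],
}

ranks = pagerank(site_graph)
for page, value in sorted(ranks.items(), key=lambda item: item[1], reverse=True):
    print(f"{page}: {value:.3f}")
```

In this graph the page that receives the most links ends up with the highest score, and the page that nothing links to ends up with the lowest. This is the intuition behind the later advice on contextual interlinking.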

As Wikipedia explains, domain-driven data mining represents a sophisticated approach that integrates domain-specific knowledge directly into the discovery process. This method enhances the relevance and precision of discovered information by contextualizing data within specific knowledge frameworks. Such techniques allow AI assistants to move beyond simple keyword matching and develop a more nuanced understanding of web content.

The evolution of these discovery methods continues to push technological boundaries. Modern AI systems now employ algorithms that can recognize subtle contextual relationships, understand content intent, and dynamically adapt their search strategies.

Website owners seeking deeper insight into how AI discovers online content can explore our What Is AI Search? Complete Overview for 2025 resource for a more comprehensive understanding.

Role of Structured Data and Sitemaps

Structured data serves as the critical backbone for AI assistants to efficiently discover and comprehend website content. According to Wikipedia, automatic basis function construction enables AI systems to simplify complex data into more manageable representations, which is fundamental for accurate information processing and decision-making.

Structured data provides AI assistants with clear, organized information about a website’s content through several key mechanisms:

- Schema Markup: Provides explicit context about page content

- JSON-LD Annotations: Enables machine-readable metadata

- Semantic Tagging: Helps define relationships between content elements

- Metadata Descriptors: Offers precise information about page attributes

- Hierarchical Content Classification: Supports intelligent content understanding
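As an example of schema markup delivered through JSON-LD, the sketch below builds a schema.org `Article` description and wraps it in the script tag that pages normally embed in their HTML. The `build_article_jsonld` helper and the sample values are hypothetical. The property names (`headline`, `author`, `datePublished`, `mainEntityOfPage`) come from the schema.org vocabulary.

```python
import json


def build_article_jsonld(headline, author_name, date_published, url):
    """Return a JSON-LD <script> tag describing an article."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,  # ISO 8601 date string
        "mainEntityOfPage": url,
    }
    payload = json.dumps(data, indent=2)
    # Escape "</" so a value can never close the script tag early;
    # "<\/" is still valid JSON and decodes back to "</".
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


snippet = build_article_jsonld(
    headline="Complete Guide to How AI Assistants Find Websites",
    author_name="Example Author",          # placeholder value
    date_published="2025-01-01",           # placeholder value
    url="https://example.com/ai-guide",    # placeholder value
)
print(snippet)
```

The tag is usually placed in the page's `<head>`. Which properties a given crawler actually reads is not specified in this article, so the selection above is only a plausible minimum.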

As Wikipedia explains, inductive logic programming allows AI systems to learn from examples and background knowledge, significantly enhancing the interpretability of structured data. Sitemaps play an equally crucial role by providing a comprehensive blueprint of a website’s structure. They act as a navigational guide for AI crawlers, helping them efficiently map and index web content.
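A sitemap in the standard sitemaps.org XML format can be generated with Python's standard library. This is a minimal sketch: the `build_sitemap` helper and the page list are hypothetical, and only the `loc` and `lastmod` elements are emitted.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from an iterable of (url, lastmod) pairs.

    `lastmod` is expected as a YYYY-MM-DD string.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = loc
        ET.SubElement(url_el, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Placeholder pages and dates for illustration.
xml_text = build_sitemap([
    ("https://example.com/", "2025-01-15"),
    ("https://example.com/blog", "2025-01-10"),
])
print(xml_text)
```

Keeping `lastmod` accurate matters more than generating the file once, since a stale sitemap is one of the pitfalls discussed later in this guide.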

The integration of structured data and sitemaps creates a symbiotic relationship that dramatically improves website discoverability. By providing clear, machine-readable information, websites can signal their relevance and content structure more effectively to AI assistants. This approach ensures that complex digital content can be accurately interpreted, categorized, and surfaced in search results.

For website owners looking to optimize their online presence, Examples of Search Engine Optimization for Sites offers additional strategies for improving AI and search engine visibility.

Optimizing Websites for AI-Focused Visibility

Website optimization for AI discoverability requires a strategic approach that goes beyond traditional search engine optimization. According to Wikipedia, literature-based discovery demonstrates how structured content can help AI systems uncover new relationships and insights, making content organization crucial for visibility.

Key strategies for enhancing AI-focused website visibility include:

- Semantic Content Structuring: Creating logically connected information

- Comprehensive Metadata: Providing detailed context for AI interpretation

- Clear Hierarchical Information: Organizing content with precise categorization

- Contextual Interlinking: Establishing meaningful connections between content pieces

- Natural Language Optimization: Writing in clear, comprehensible formats
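One way to check for clear hierarchical information is to verify that a page's headings form a sensible outline. The sketch below flags pages without exactly one `h1` and pages that skip heading levels. These two rules are common authoring conventions assumed here for illustration, not documented requirements of any AI system, and the `heading_issues` helper is hypothetical.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Records the level of every h1..h6 tag in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_issues(html):
    """Return a list of human-readable outline problems found in `html`."""
    collector = HeadingCollector()
    collector.feed(html)
    levels = collector.levels
    issues = []
    h1_count = levels.count(1)
    if h1_count != 1:
        issues.append(f"expected exactly one h1, found {h1_count}")
    for previous, current in zip(levels, levels[1:]):
        if current > previous + 1:
            issues.append(f"heading level jumps from h{previous} to h{current}")
    return issues


good_page = "<h1>Guide</h1><h2>Crawling</h2><h3>Links</h3><h2>Ranking</h2>"
bad_page = "<h1>Guide</h1><h3>Links</h3>"

print(heading_issues(good_page))
print(heading_issues(bad_page))
```

Moving back up the outline (for example from `h3` to `h2`) is treated as valid, since that is how a new section normally begins.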

As Wikipedia explains, automated identification systems rely on precise, well-labeled data to generate accurate classifications. Similarly, AI assistants depend on websites presenting information in easily digestible, machine-readable formats. This means developing content that not only informs human readers but also provides clear signals and pathways for AI systems to understand and index.

The ultimate goal of AI-focused optimization is creating a transparent, accessible digital environment where content can be quickly understood and contextualized. Websites must balance technical optimization with genuine, high-quality information that demonstrates expertise, relevance, and depth. By implementing these strategies, businesses can significantly improve their chances of being discovered and ranked favorably by AI discovery mechanisms.

For a comprehensive roadmap to achieving these objectives, website owners can explore our Why AI Optimized Websites: Complete Guide 2024 for in-depth insights into modern AI visibility strategies.

Common Pitfalls and How to Avoid Them

AI discovery presents complex challenges for websites seeking optimal visibility. According to arXiv, understanding and explaining neural network decision-making processes is essential to prevent misinterpretations and identify potential biases that could impact website recognition.

Common pitfalls that websites encounter in AI discoverability include:

- Inconsistent Content Structure: Creating unpredictable information layouts

- Over-Optimization: Piling on technical tricks meant to manipulate rankings

- Lack of Semantic Clarity: Using ambiguous or overly complex language

- Insufficient Metadata: Providing minimal contextual information

- Neglecting Regular Content Updates: Allowing website information to become stale
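The insufficient metadata pitfall from the list above is easy to test for automatically. The following sketch reports whether a page is missing a title, a meta description, or a JSON-LD block. The `audit` helper and the three checks are assumptions chosen for illustration, so the list is a starting point rather than a complete audit.

```python
from html.parser import HTMLParser


class MetadataAudit(HTMLParser):
    """Tracks whether basic metadata elements are present in a page."""

    def __init__(self):
        super().__init__()
        self.has_title = False
        self.has_description = False
        self.has_json_ld = False
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta":
            name = (attributes.get("name") or "").lower()
            content = (attributes.get("content") or "").strip()
            if name == "description" and content:
                self.has_description = True
        elif tag == "script":
            if attributes.get("type") == "application/ld+json":
                self.has_json_ld = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        # Only non-empty text inside <title> counts as a real title.
        if self._in_title and data.strip():
            self.has_title = True


def audit(html):
    """Return the names of the metadata items missing from `html`."""
    checker = MetadataAudit()
    checker.feed(html)
    missing = []
    if not checker.has_title:
        missing.append("title")
    if not checker.has_description:
        missing.append("meta description")
    if not checker.has_json_ld:
        missing.append("JSON-LD structured data")
    return missing


complete_page = (
    "<html><head><title>AI Guide</title>"
    '<meta name="description" content="How AI assistants find websites.">'
    '<script type="application/ld+json">{"@type": "Article"}</script>'
    "</head><body></body></html>"
)
bare_page = "<html><head></head><body><p>Hello</p></body></html>"

print(audit(complete_page))
print(audit(bare_page))
```

Running a check like this on every page as part of a regular audit helps catch pages that were published without the metadata the rest of the site provides.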

As arXiv research highlights, agentic AI systems require robust evaluation metrics and collaborative approaches to maintain reliability. This means websites must focus on creating transparent, consistently structured content that provides clear signals to AI discovery mechanisms. Avoiding algorithmic manipulation and concentrating on genuine, high-quality information becomes paramount.

The most effective strategy involves maintaining a balanced approach that prioritizes human readability alongside machine comprehension. Websites should develop content that flows naturally, uses clear semantic structures, and provides comprehensive context without appearing artificially engineered. Regular audits, continuous learning, and adaptability are key to staying ahead of evolving AI discovery algorithms.

For website owners looking to dive deeper into avoiding these common pitfalls, our How to Audit Website for AI: Step-by-Step Visibility Guide offers comprehensive strategies for maintaining optimal AI discoverability.

Boost Your Website’s AI Discoverability Effortlessly

The article reveals just how complex it is for AI assistants to identify and rank websites with precision. You might be feeling overwhelmed trying to keep up with ever-changing AI algorithms that rely on structured data, semantic content, and constant updates. The challenge is real: How do you maintain AI-friendly content that is both easily understandable by smart systems like ChatGPT or Gemini and remains engaging for your human visitors? Avoid common pitfalls like inconsistent metadata or outdated sitemaps that can reduce your website’s visibility drastically.

Take control of your website’s AI recognition today with aeoptimer.com. Our platform automates key tasks such as adding structured data and updating your content behind the scenes without altering your site’s look. By simply placing one small script on your pages, you get ongoing optimization that keeps your site aligned with the latest AI discovery techniques discussed in the guide. Discover how effortless it is to stay ahead in AI-focused search by exploring our complete AI visibility strategies. Ready to transform your site into an AI-friendly powerhouse? Visit aeoptimer.com now and get started with a solution designed to increase your AI-based traffic without technical headaches.

Frequently Asked Questions

How do AI assistants discover websites online?

AI assistants discover websites through advanced web crawling technologies that systematically scan the internet, analyze online content, and follow hyperlinks to map digital connections.

What are the key factors that AI assistants use to evaluate websites?

AI assistants evaluate websites based on factors such as structural metadata, content relevance, link networks, update frequency, and technical performance indicators.

How can websites improve their discoverability by AI systems?

Websites can improve AI discoverability by implementing structured data, providing clear metadata, maintaining a semantic structure, and ensuring regular content updates to signal relevance and clarity to AI assistants.

What role does structured data play in website discovery by AI?

Structured data provides AI assistants with organized and machine-readable information about a website’s content, helping them understand and categorize the information effectively.